Why ThreeShield clients weren't affected

The thousands of successful ESXiArgs ransomware attacks that exploited unpatched VMware ESXi hosts in February 2023 illustrate the benefits of proactive information security management. Our managed security clients weren’t affected because our proactive approach:

- disabled the vulnerable CIM SLP service starting over 8 years ago

- applied, over two years ago, the patches that would have fixed the problem even if CIM SLP hadn’t been disabled

- uses layers of protection that restrict and protect direct access to sensitive systems like VMware ESXi and Hyper-V hypervisors

- monitors files that the ransomware script changed

- backs up VMware, Hyper-V, servers, workstations, Google Workspace, and Microsoft 365 off-site to Canadian data centres

- monitors server backups for ransomware

Reacting now shows deeper systemic problems

If you or your IT provider are having to react to news of these attacks, which succeeded only where a well-publicized patch had been missing for over two years on a protocol that shouldn't have been enabled or exposed to the Internet, it means that the VMware issues in your organization are just the tip of a much more dangerous iceberg of problems stemming from a lack of proactive, holistic information security controls.

A holistic IT controls-based approach to prevent being hit by the ESXiArgs ransomware

You may want to adopt ThreeShield’s holistic approach to IT and information security, which includes the following standard practices that prevented our clients from being affected:

Ensure that all software and licenses are current.

When we start providing holistic IT and information security services for a new client, we often encounter unsupported VMware ESXi versions. In some cases, existing hardware doesn’t support an upgrade; however, typically, nobody was watching the systems after the initial installation and updates fell behind.

In many cases, we can save businesses the cost of VMware ESXi, vCenter, vSphere, and related upgrades by moving them to Hyper-V or other services. In other cases, paying to upgrade VMware makes sense. Coincidentally, in February 2021, when VMware released the fix for the vulnerability that the ESXiArgs ransomware exploits, a few of our clients were due to renew their VMware licenses. VMware's difficulties in correcting the problem were part of the reason we moved some of these clients to Hyper-V at the time.

The ESXiArgs ransomware campaign targets VMware ESXi servers running version 7.0 before ESXi70U1c-17325551, 6.7 before ESXi670-202102401-SG, or 6.5 before ESXi650-202102101-SG (CVE-2021-21972, CVE-2021-21973, CVE-2021-21974).
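Checking which hosts fall inside these ranges can be scripted. The following POSIX sh sketch covers only the 7.0 line, because build 17325551 is the only build number given above. The 6.7 and 6.5 thresholds are patch IDs, so those hosts still need a manual patch-level check. The sketch assumes the version string has the format printed by `vmware -v` on the host (for example, `VMware ESXi 7.0.1 build-17325551`). The function names are hypothetical.

```sh
#!/bin/sh
# Sketch: is an ESXi 7.0 host at or above ESXi70U1c-17325551?
# Assumes input shaped like the output of `vmware -v`:
#   VMware ESXi 7.0.1 build-17325551

FIXED_70_BUILD=17325551

# Print the build number, e.g. 17325551.
esxi_build() {
    printf '%s\n' "$1" | sed -n 's/.*build-\([0-9][0-9]*\).*/\1/p'
}

# Print the major.minor version, e.g. 7.0.
esxi_major_minor() {
    printf '%s\n' "$1" | sed -n 's/.*ESXi \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Exit status: 0 = patched 7.0 host, 1 = 7.0 host below the fixed build,
# 2 = not a 7.0 version string (check 6.7/6.5 patch levels by hand).
esxi70_patched() {
    ver=$(esxi_major_minor "$1")
    build=$(esxi_build "$1")
    if [ "$ver" != "7.0" ] || [ -z "$build" ]; then
        return 2
    fi
    [ "$build" -ge "$FIXED_70_BUILD" ]
}
```

On a host, `esxi70_patched "$(vmware -v)"; echo $?` prints 0, 1, or 2.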

Another benefit of maintaining software licenses is that we use software license renewals as an opportunity to evaluate business needs and secure alternatives.

ThreeShield can manage your software licenses and review your systems for missing patches. Click here to get started

Employ a risk-based patching process to make sure that critical patches get applied immediately and computers have a reboot window every week.

We also have processes in our daily, weekly, and monthly checklists to make sure that we catch any patches that may have slipped through the cracks.
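A daily checklist item that catches slipped patches could look like the following POSIX sh sketch. The deadlines are assumptions chosen to mirror the policy above:

- critical patches are overdue after one day;
- everything else is overdue after the seven-day weekly reboot window.

The input format is also hypothetical: one `host patch severity days_pending` record per line, exported from whatever patch management tool you use.

```sh
#!/bin/sh
# Sketch: flag patches that have waited longer than a risk-based deadline.
# Hypothetical policy: critical = 1 day, everything else = 7 days (weekly window).

# Exit status 0 if a patch of severity $1, pending $2 days, is overdue.
patch_overdue() {
    case "$1" in
        critical) limit=1 ;;
        *) limit=7 ;;
    esac
    [ "$2" -ge "$limit" ]
}

# Read "host patch severity days_pending" lines on stdin; print the overdue ones.
report_overdue() {
    while read -r host patch severity days; do
        [ -n "$host" ] || continue   # skip blank lines
        if patch_overdue "$severity" "$days"; then
            printf '%s %s (%s, %s days)\n' "$host" "$patch" "$severity" "$days"
        fi
    done
}
```

For example, `report_overdue < pending-patches.txt` prints one line per overdue patch. The file name is a placeholder.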

Using this process, our clients’ vulnerable ESXi instances received the patches that prevent ESXiArgs ransomware attacks in February 2021.

It’s critical to apply these patches now; however, if you are just applying patches to block this attack or those that your auditors mention instead of implementing a sustainable holistic patching process, you will likely become vulnerable again within a month.

Click here to have ThreeShield manage your patches and find vulnerable systems

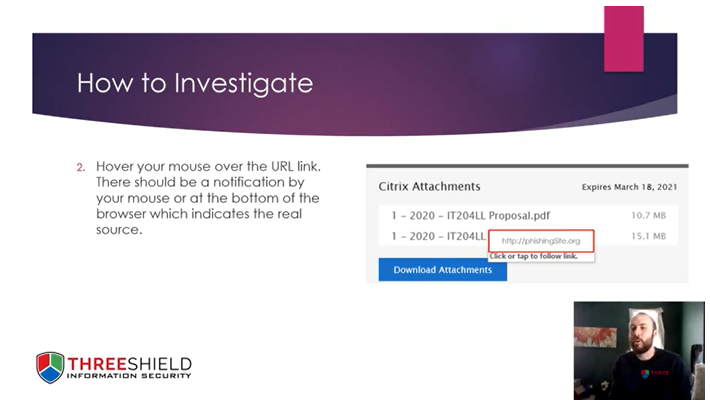

Block all Internet traffic except for the minimum required for business purposes.

In this case, none of our managed clients have ports 27 or 427 open to the Internet. However, when we start working with a new company, we often find vulnerable systems such as ESXi and Network Attached Storage (NAS) devices exposed to the Internet.

Limit all network ports for internal services to specific devices and require additional multi-factor authentication before authorized devices can access them.

If we had port 427 open on hosts, we would restrict access to it to specific authorized devices.
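Where SLP must stay enabled, one way to restrict port 427 on the host itself is the ESXi firewall's allowed-IP list. The POSIX sh sketch below assumes that the CIMSLP ruleset accepts an allowed-IP list on your ESXi build; you can check with `esxcli network firewall ruleset allowedip list` first. The function name is hypothetical, and the example addresses come from the 192.0.2.0/24 documentation range. This covers only the network restriction, not the additional multi-factor authentication described above.

```sh
#!/bin/sh
# Sketch: limit an ESXi firewall ruleset (e.g. CIMSLP, port 427) to management IPs.
# Usage: restrict_ruleset CIMSLP 192.0.2.10 192.0.2.11

restrict_ruleset() {
    ruleset=$1
    shift
    [ "$#" -gt 0 ] || { echo "at least one allowed IP is required" >&2; return 2; }
    # Stop accepting this ruleset's traffic from every address...
    esxcli network firewall ruleset set --ruleset-id="$ruleset" --allowed-all false || return 1
    # ...then allow only the listed management addresses (IPs or CIDR ranges).
    for ip in "$@"; do
        esxcli network firewall ruleset allowedip add --ruleset-id="$ruleset" --ip-address="$ip" || return 1
    done
}
```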

Use host-based firewalls to limit internal attacks.

In this case, we also follow the best practice of limiting access through the ESXi firewall ruleset, using the following command over SSH (also listed in the disabling process below):

esxcli network firewall ruleset set -r CIMSLP -e 0
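To confirm that the command above took effect, you can check the ruleset list. This sketch assumes that `esxcli network firewall ruleset list` prints a `Name` column followed by an `Enabled` column of `true`/`false` values. The function name is hypothetical.

```sh
#!/bin/sh
# Sketch: succeed only if the CIMSLP ruleset is listed as disabled.
# Usage on the host: esxcli network firewall ruleset list | cimslp_disabled

cimslp_disabled() {
    # Remember the Enabled value on the CIMSLP row; fail if the row is missing.
    awk '$1 == "CIMSLP" { found = 1; state = $2 }
         END { exit !(found && state == "false") }'
}
```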

ThreeShield can also manage your cloud, network, and host-based firewalls. Click here to get started

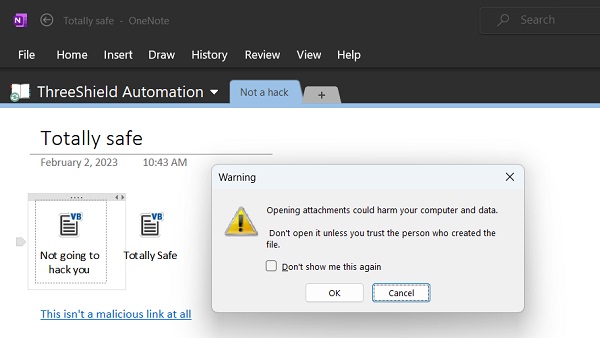

Limit and monitor potentially dangerous filetypes

In particular, scripts (in this case, encrypt.sh), executables, and old Office formats.
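An inventory of these file types is a reasonable starting point for limiting them. The POSIX sh sketch below lists the following files under a directory:

- scripts;
- old Office formats;
- any regular file with the owner execute bit set.

The extension list is an assumption to adjust for your environment, and the function name is hypothetical.

```sh
#!/bin/sh
# Sketch: list potentially dangerous files under a directory, sorted by path.
# Hypothetical watch list: shell/Python scripts, old Office formats, and any
# regular file whose owner execute bit (octal 100) is set.
list_risky_files() {
    find "$1" -type f \( -name '*.sh' -o -name '*.py' \
        -o -name '*.doc' -o -name '*.xls' -o -name '*.ppt' \
        -o -perm -100 \) | sort
}
```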

Monitor operating systems for file changes.

In this case, catching changes to files with a .sh extension, the motd file, and .html files that are changed in this attack would all have stopped or limited the damage.
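A minimal file-integrity check for the files named above could look like the POSIX sh sketch below. It records SHA-256 hashes of `.sh`, `motd`, and `.html` files under a directory. It then reports any path whose hash changed, or that appeared or disappeared. It assumes `find -exec ... {} +` and `sha256sum` are available; both are on typical Linux systems, so confirm them on your ESXi build. The function names are hypothetical.

```sh
#!/bin/sh
# Sketch: baseline and compare hashes of the file types this attack changes.

# Print "hash  path" for every watched file under $1, sorted by path.
fim_snapshot() {
    find "$1" -type f \( -name '*.sh' -o -name 'motd' -o -name '*.html' \) \
        -exec sha256sum {} + | sort -k 2
}

# Compare root $1 with baseline file $2. Print changed, added, or removed
# paths and return 1 if there are any; return 0 if nothing changed.
fim_check() {
    current=$(mktemp) || return 2
    fim_snapshot "$1" > "$current"
    # diff marks lines unique to either side with "<" or ">"; keep only the path.
    changes=$(diff "$2" "$current" | sed -n 's/^[<>] [0-9a-f]*  //p' | sort -u)
    rm -f "$current"
    [ -n "$changes" ] || return 0
    printf '%s\n' "$changes"
    return 1
}
```

Typical use is to save `fim_snapshot /some/root > baseline.txt` once. After that, a scheduled job runs `fim_check /some/root baseline.txt` and alerts on a non-zero exit.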

We're happy to support your server and workstation security monitoring efforts. Click here to get started

Run endpoint managed detection and response tools on all operating systems, including Linux and hypervisors.

This would have also prevented the execution of the encrypt.sh file that ESXiArgs uses.

Yes, Mac and Linux also need endpoint detection and response (what we used to call antivirus). These tools now also tell us about intrusions, breach footholds (that let the bad guys get back into your systems), and other risks. Click here to get ThreeShield to support your server and endpoint security

Back up all systems outside of your production network using systems that aren’t affected by network worms and other ransomware attacks that typically wipe out local backup systems.

Many of the affected companies were using backup services like Comet, Veeam, and virtual versions of backup systems that were running in the same VMware environment as the one affected by the ransomware attack. As such, the backups were also affected. Similarly, relying on snapshots on the same system isn’t a sustainable backup methodology.

If you aren't sure about your backup configuration or need support choosing the right solution, click here to reach out to us

Remove unnecessary system tools and bloatware bundled with other software.

In this case, the ESXiArgs attack deletes the /store/packages/vmtools.py file, which includes additional backdoors.

Disable unnecessary services.

The service in question for this vulnerability is CIM SLP, which vSphere uses to discover hardware inventory and to report some hardware health for ESXi hosts. Since we have additional health monitoring tools in place and SLP has had a lot of vulnerabilities, we disable this service after initial setup.

This decision was strengthened by remote code execution vulnerabilities discovered from 2019 through 2022:

- VMSA-2022-0030 (CVE-2022-31699)

- VMSA-2021-0014 (CVE-2021-21995)

- VMSA-2021-0002 (CVE-2021-21974)

- VMSA-2020-0023 (CVE-2020-3992)

- VMSA-2019-0022 (CVE-2019-5544)

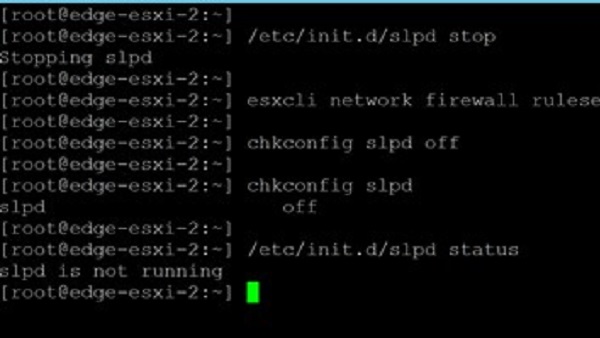

How to Disable SLP on an ESXi host

If you haven’t disabled SLP and don’t require it, you can do so by completing the following steps (for more information and current VMware instructions, please see https://kb.vmware.com/s/article/76372).

From vCenter:

- Log into the vCenter and select an ESXi host

- Click Services on the left side under Configure and look for SLP

- If you don’t see SLP, use SSH (detailed below). If you do see it, select SLP and click Stop, then OK

- Edit the Startup Policy, deselect Start and stop with host, and click OK

From SSH:

- Log into the ESXi host via SSH (which should be limited to specific internal devices only)

- Check whether the SLP service is in use with the following command:

esxcli system slp stats get

- If SLP was running, stop it with the following command:

/etc/init.d/slpd stop

- Block SLP traffic by disabling the CIMSLP firewall ruleset with the following command:

esxcli network firewall ruleset set -r CIMSLP -e 0

- Make sure that SLP won’t start after a reboot with the following command:

chkconfig slpd off

- Check that the change will persist across reboots with this command:

chkconfig --list | grep slpd
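The SSH steps above can be collected into one function. The POSIX sh sketch below runs them in order and stops if the firewall or boot-configuration step fails. It finishes with the same `chkconfig --list` check. It assumes that once slpd is disabled, `chkconfig --list` prints a line starting with `slpd` followed by `off`. `SLPD_INIT` is a hypothetical override for the init script path, added so the steps can be dry-run.

```sh
#!/bin/sh
# Sketch: run the documented SLP-disabling steps on an ESXi host, in order.
# SLPD_INIT is a hypothetical override; it defaults to the documented init script.
SLPD_INIT=${SLPD_INIT:-/etc/init.d/slpd}

disable_slp() {
    # Informational only: show whether SLP is in use before changing anything.
    esxcli system slp stats get
    # Stopping may fail harmlessly if slpd is not running, so only warn.
    "$SLPD_INIT" stop || echo "slpd stop returned non-zero (it may already be stopped)" >&2
    # Block SLP in the host firewall and disable it at boot.
    esxcli network firewall ruleset set -r CIMSLP -e 0 || return 1
    chkconfig slpd off || return 1
    # Verify the boot setting; grep -q fails if slpd is not reported as off.
    chkconfig --list | grep -q '^slpd[[:space:]]*off'
}
```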

To re-enable SLP, follow the vCenter instructions above, but start SLP and change the policy to Start and stop with host.

You can also do it through SSH with the following commands:

- Enable the ruleset:

esxcli network firewall ruleset set -r CIMSLP -e 1

- Change the configuration:

chkconfig slpd on

- Check to make sure it worked:

chkconfig --list | grep slpd

- Start the SLP service:

/etc/init.d/slpd start

How to recover from ESXiArgs

If you were affected by this attack, you may want to review the procedure documented at https://gist.github.com/MarianBojescu/da539a47d5eae29383a4804218ad7220 ("CryptoLocker attack CVE-2020-3992 Workaround for data recovery for linux VMs") to attempt recovery. Its steps are summarized below.

References:

- https://kb.vmware.com/s/article/1002511

- https://www.simplified.guide/linux/disk-recover-partition-table

Step 1: Create a new virtual machine on the same ESXi host that was affected. The following examples use Debian 10.

Step 2: Log into the affected ESXi host via SSH.

Step 3: Go to your datastore and make a copy of the affected VM:

cd /your/datastore/mount/point

mkdir OldVm_Recovery

cp -r OldVm/* OldVm_Recovery/

Step 4: Go into the copy and list all files to find the size of the flat file:

cd OldVm_Recovery

ls -la

The output will include a line like:

-rw------- 1 root root 123456789 Feb 3 08:47 old-vm-flat.vmdk

Create a new VMDK file, replacing 123456789 with the size from the ls -la output:

vmkfstools -c 123456789 -a lsilogic -d thin temp.vmdk

Remove the new flat file that was created with it:

rm temp-flat.vmdk

Rename the newly created temp.vmdk. The name must be the same as the flat file's, without "-flat" (e.g., for the flat file old-vm-flat.vmdk, the VMDK file is old-vm.vmdk):

mv temp.vmdk old-vm.vmdk

Edit the VMDK file:

vi old-vm.vmdk

Change this line from:

RW 209715201 VMFS "temp-flat.vmdk"

to:

RW 209715201 VMFS "old-vm-flat.vmdk"

Then delete this line:

ddb.thinProvisioned = "1"

Step 5: Check the VMDK file (not the *-flat.vmdk file):

vmkfstools -e old-vm.vmdk

If everything is OK, go to the next step.

Step 6: Add old-vm.vmdk as an additional disk to the Linux VM created in Step 1, log into that VM, and install the testdisk tool:

apt install --assume-yes testdisk

Now follow the procedure at https://www.simplified.guide/linux/disk-recover-partition-table to rebuild the partition table. If you are lucky, you can then mount the disk and recover data from it.
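The `vi` edits in Step 4 can also be scripted, which helps when several VMs need the same repair. This POSIX sh sketch assumes two things: the descriptor was created as `temp.vmdk` and renamed as described, and its lines match the ones shown in the procedure. It writes to a temporary file and then replaces the descriptor, so keep the copy of the VM folder made in Step 3. The function name is hypothetical.

```sh
#!/bin/sh
# Sketch: point a renamed descriptor at the original flat file and drop the
# thin-provisioning flag, mirroring the manual vi edits in Step 4.
# Usage: fix_descriptor old-vm.vmdk   (expects old-vm-flat.vmdk beside it)

fix_descriptor() {
    descriptor=$1
    # old-vm.vmdk -> old-vm-flat.vmdk (basename only, as stored in the extent line)
    flat=$(basename "${descriptor%.vmdk}")-flat.vmdk
    tmp=$descriptor.tmp
    sed -e "s/\"temp-flat\.vmdk\"/\"$flat\"/" \
        -e '/^ddb\.thinProvisioned = "1"$/d' "$descriptor" > "$tmp" &&
        mv "$tmp" "$descriptor"
}
```

Afterwards, run `vmkfstools -e old-vm.vmdk` as in Step 5 before attaching the disk.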

What our clients say about ThreeShield

CTO, Tilia Inc. (Financial Technology and Online Payments)

"ThreeShield has employed a dynamic, risk-based approach to information security that is specific to our business needs but also provides comfort to our external stakeholders. I recommend their services."

IT Architect, Financial Technology and Online Retail

"Collaborating with ThreeShield to ensure data security was an exciting and educational experience. As we exploded in growth, it was clear that we needed to rapidly mature on all fronts, and ThreeShield was integral to building our confidence with information, software, and infrastructure security."

IT Security Director, Linden Lab (Virtual Reality)

"ThreeShield helped us focus our efforts, enhancing our security posture and verifying PCI compliance.

All of this was achieved with minimal disruption to the engineering organization as a whole.

The approach was smart. In a short time, we accomplished what much larger companies still struggle to achieve."

Senior Director of Systems and Build Engineering

"ThreeShield very much values active and respectful collaboration, and went out of their way to get feedback on policies to make sure proposals balanced business needs while not making employees feel like they were dealing with unreasonable overhead. By doing so, ThreeShield really helped change the culture around security mindfulness in positive ways."
